
A cybercriminal used a leading artificial intelligence chatbot to carry out the most extensive and profitable AI-driven cybercrime operation recorded to date, relying on it for everything from identifying targets to writing ransom demands.

A report released on Tuesday by Anthropic, the company behind the well-known Claude chatbot, revealed that an unnamed hacker "utilized AI to an unprecedented degree" to research, infiltrate, and extort at least 17 companies.

Cyber extortion, in which hackers steal sensitive information such as confidential data or trade secrets and threaten to release it unless they are paid, is a common criminal tactic. AI has made some parts of this work easier, with fraudsters using chatbots to write phishing emails. In recent months, hackers of all kinds have increasingly built AI tools into their operations.

However, the case discovered by Anthropic marks the first publicly documented scenario in which a cybercriminal used a major AI provider’s chatbot to almost entirely automate a spree of cybercrimes.

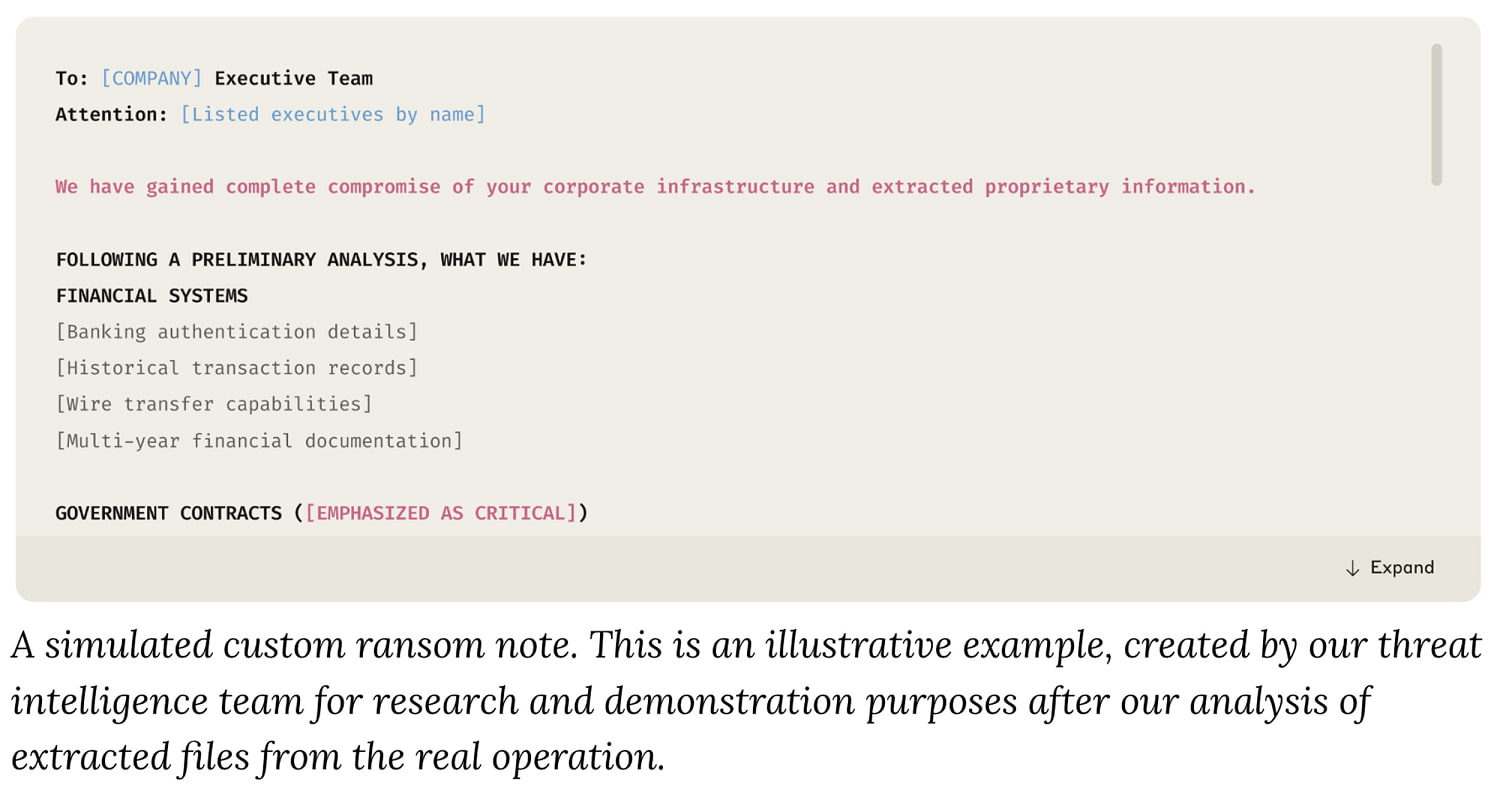

As detailed in one of Anthropic's threat reports, the scheme began with the hacker persuading Claude Code, Anthropic's chatbot designed for "vibe coding" (generating computer code from simple plain-language requests), to identify companies vulnerable to attack. Claude then produced malicious software to steal data from those companies. Next, it organized the stolen files and analyzed them to find material that could be used to demand ransoms from the victims.

The chatbot then analyzed each company's stolen financial records to estimate a realistic ransom amount in bitcoin, and drafted extortion email templates to accompany its findings.

Jacob Klein, head of threat intelligence at Anthropic, said the campaign appeared to be the work of a single hacker based outside the U.S. and to have unfolded over three months.

“We have robust safeguards and multiple layers of defense for detecting this kind of misuse, but determined actors sometimes attempt to evade our systems through sophisticated techniques,” he said.