
The Trump administration is contemplating a significant move to assert its influence over the regulation of artificial intelligence in the United States. According to a draft order obtained by Axios, this executive action could see the Department of Justice (DOJ) clashing with states that are attempting to establish their own AI regulations. This initiative aims to centralize AI oversight, with President Donald Trump’s administration seeking to prevent a patchwork of state rules that might stifle innovation and competitiveness, especially in comparison to China’s rapidly advancing AI industry.

In a private meeting, Commerce Secretary Howard Lutnick emphasized that the administration believes it can address these regulatory concerns without the need for Congressional involvement. This sentiment was echoed in a statement to the Daily Mail by a White House official, who noted that discussions surrounding potential executive orders remain speculative until officially announced. If enacted, the draft order would require Attorney General Pam Bondi to establish an “AI Litigation Task Force” within a month to challenge state-level AI regulations.

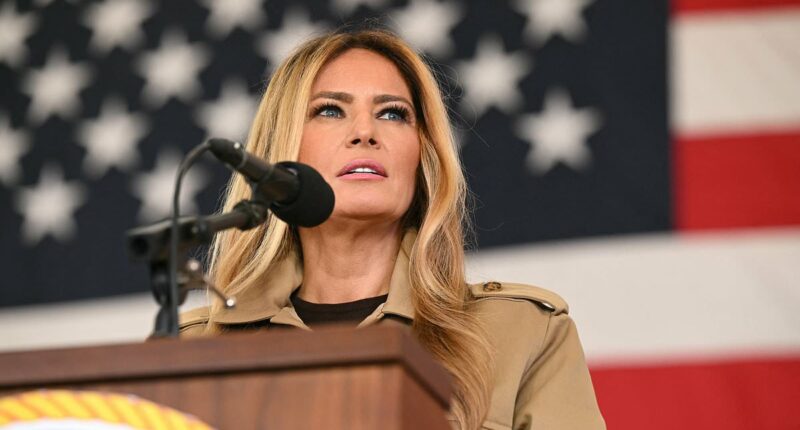

This task force would have the authority to contest state laws, particularly those that could be seen as unconstitutionally affecting interstate commerce. Additionally, various federal agencies would be tasked with reviewing existing state AI regulations that might conflict with the proposed executive action. This development follows a recent cautionary statement from First Lady Melania Trump, who highlighted the ‘dystopian’ risks posed by emerging technologies and the necessity for safeguards to protect vulnerable populations, including children.

‘To win the AI war, we must train our next generation, for it’s America’s students who will lead the Marine Corps in the future,’ the first lady told military personnel and their families at Marine Corps Air Station New River in Jacksonville, North Carolina, on Wednesday. She warned that the AI revolution is the most pressing societal change since the development of nuclear weapons and that the US cannot afford to lose the AI race. The unsettling theme sparked a meltdown online, with some social media users slamming the grim warning as ‘dystopian.’

On Thursday, Press Secretary Karoline Leavitt said at a briefing that she has not seen the president personally use AI, despite his support of the tech. Over the summer, the White House had pushed for a 10-year moratorium on states’ ability to regulate AI to be included in Trump’s Big Beautiful Bill. However, Congresswoman Marjorie Taylor Greene loudly condemned the provision, arguing it is an infringement on states’ rights.

‘I am adamantly OPPOSED to this and it is a violation of state rights,’ Greene wrote in June. ‘We have no idea what AI will be capable of in the next 10 years and giving it free rein and tying states hands is potentially dangerous.’ Soon after, the Senate amended the bill and removed the moratorium. The move upset White House AI advisor David Sacks, who has recently been advocating for reforms similar to the 10-year ban on AI regulation.

‘We should let Donald Trump write these rules,’ Sacks said recently on the All-In podcast that he co-hosts. The AI advisor stopped short of ridiculing Republican lawmakers for killing the measure, but made clear that the current approach is not working. ‘Republicans are in power in Washington, and the states are making a bunch of bad decisions with respect to AI,’ he said. States that do not align themselves with the reported executive order would risk losing federal grant funds, according to the current proposal.

One of the White House’s top concerns is that if states are left to regulate AI on their own, the result could be a patchwork in which each of the 50 states creates its own rules for AI companies to abide by. That could mean 50 different sets of regulations, filing deadlines and other cumbersome processes for a fast-growing industry that is trying to compete with China. ‘It doesn’t make sense to have model companies needing to report to 50 different states, 50 different agencies within those states, each with a different definition of what needs to be reported, each with different reporting deadlines,’ Sacks said on his podcast.

He also warned that major Democratic strongholds, like New York and California, could draft their own regulations that get adopted by other states. ‘Why would you allow the big blue states to essentially insert DEI into the models, which will affect the red states too?’ he asked. In California, for example, Governor Gavin Newsom signed a law in September mandating that large AI companies publish their safety protocols, risk mitigation strategies and critical safety incidents annually. The law does not go into effect until 2026, but its advocates have hailed it as a framework that could be adopted in other states, like New York.