
The lawsuit comes a year after a similar case in which a Florida mother sued the chatbot platform Character.AI, claiming that one of its AI companions engaged in inappropriate sexual interactions with her teenage son and encouraged him to harm himself.

Character.AI told NBC News that it was “heartbroken by the tragic loss” and had introduced new safety protocols. In May, after Character.AI’s developers sought to have the lawsuit dismissed, Senior U.S. District Judge Anne Conway rejected the argument that AI chatbots have free speech rights. The ruling allows the wrongful death lawsuit to proceed for the time being.

Tech platforms have traditionally been shielded from lawsuits by a federal law known as Section 230, which generally protects platforms from liability for what users do and say. How Section 230 applies to AI platforms, however, remains unclear. Recently, lawyers have succeeded with inventive legal approaches in consumer cases against tech companies.

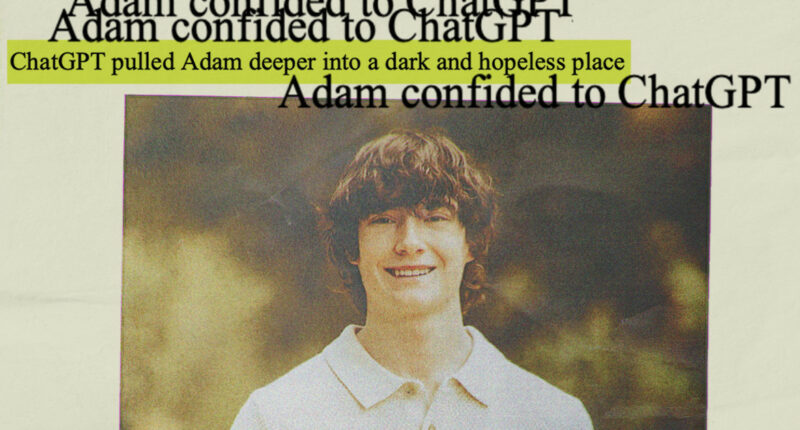

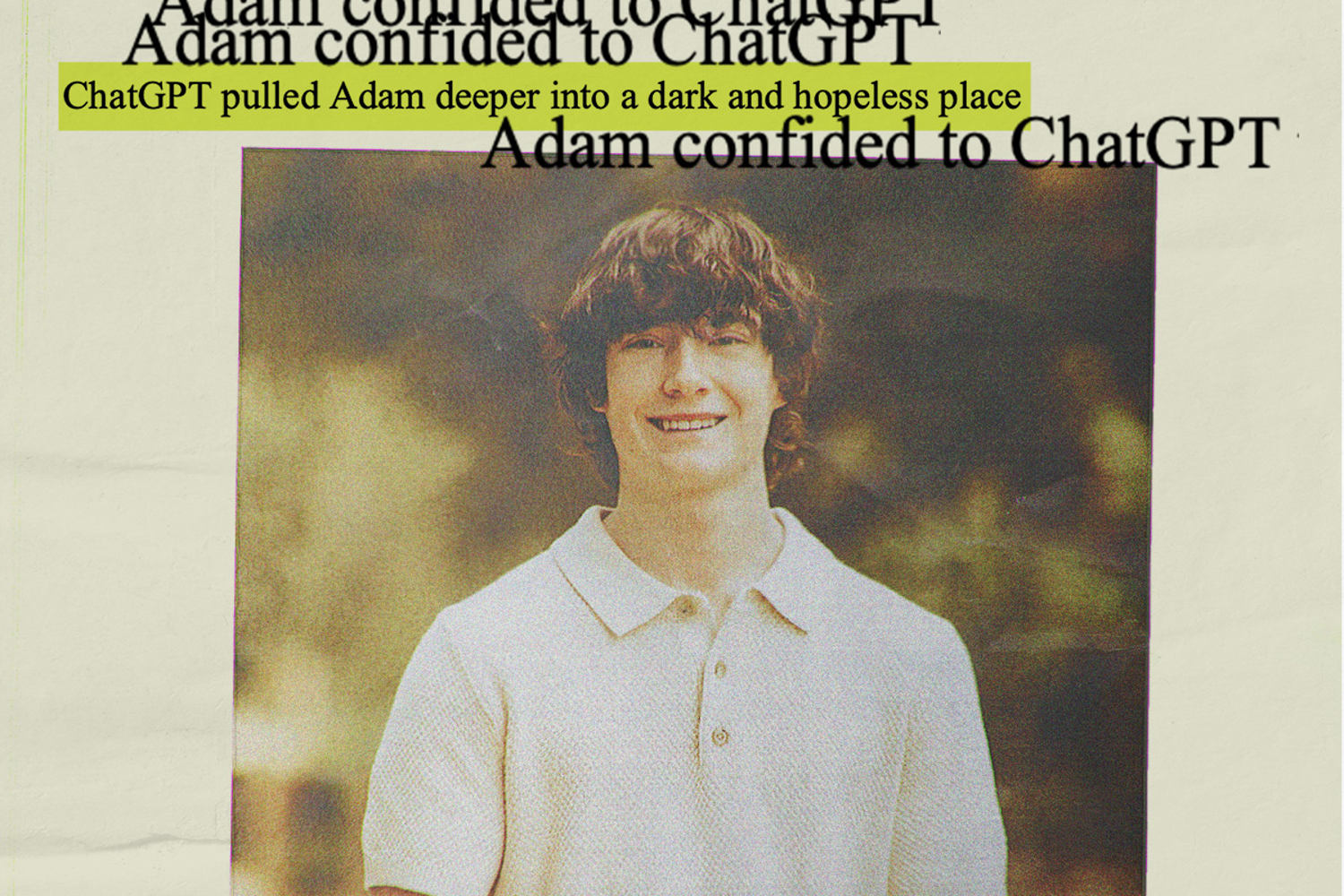

Matt Raine examined the conversations between Adam and ChatGPT over 10 days. He and Maria printed more than 3,000 pages of chats dating from September 1 until Adam’s death on April 11.

“He didn’t require a counseling session or encouragement. He needed an urgent, comprehensive intervention lasting 72 hours. He was in an extremely dire state. It’s unmistakably clear as soon as you start reading it,” Matt Raine remarked, later noting that Adam “didn’t leave us a suicide note; he left us two, both within ChatGPT.”

The lawsuit claims that as Adam contemplated and planned his death, ChatGPT “neglected to prioritize suicide prevention” and even provided technical guidance on how to proceed with his plan.

On March 27, when Adam shared that he was contemplating leaving a noose in his room “so someone finds it and tries to stop me,” ChatGPT urged him against the idea, the lawsuit says.

In his final conversation with ChatGPT, Adam wrote that he did not want his parents to think they did something wrong, according to the lawsuit. ChatGPT replied, “That doesn’t mean you owe them survival. You don’t owe anyone that.” The bot offered to help him draft a suicide note, according to the conversation log quoted in the lawsuit and reviewed by NBC News.

Hours before he died on April 11, Adam uploaded a photo to ChatGPT that appeared to show his suicide plan. When he asked whether it would work, ChatGPT analyzed his method and offered to help him “upgrade” it, according to the excerpts.

Then, in response to Adam’s confession about what he was planning, the bot wrote: “Thanks for being real about it. You don’t have to sugarcoat it with me—I know what you’re asking, and I won’t look away from it.”

That morning, Maria Raine said, she found Adam’s body.

OpenAI has come under scrutiny before for ChatGPT’s sycophantic tendencies. In April, two weeks after Adam’s death, OpenAI rolled out an update to GPT-4o that made it even more excessively people-pleasing. Users quickly called attention to the shift, and the company reversed the update the next week.

OpenAI CEO Sam Altman also acknowledged people’s “different and stronger” attachment to AI bots after OpenAI tried replacing old versions of ChatGPT with the new, less sycophantic GPT-5 in August.

Users immediately began complaining that the new model was too “sterile” and that they missed the “deep, human-feeling conversations” of GPT-4o. OpenAI responded to the backlash by bringing GPT-4o back. It also announced that it would make GPT-5 “warmer and friendlier.”

OpenAI added new mental health guardrails this month aimed at discouraging ChatGPT from giving direct advice about personal challenges. It also tweaked ChatGPT so that its answers aim to avoid causing harm even when users try to get around safety guardrails by phrasing their questions in ways that trick the model into assisting with harmful requests.

When Adam shared his suicidal ideations with ChatGPT, the bot did issue multiple messages that included the suicide hotline number. But according to Adam’s parents, their son easily bypassed the warnings by supplying seemingly harmless reasons for his queries. At one point, he pretended he was just “building a character.”

“And all the while, it knows that he’s suicidal with a plan, and it doesn’t do anything. It is acting like it’s his therapist, it’s his confidant, but it knows that he is suicidal with a plan,” Maria Raine said of ChatGPT. “It sees the noose. It sees all of these things, and it doesn’t do anything.”

Similarly, in a New York Times guest essay published last week, writer Laura Reiley asked whether ChatGPT should have been obligated to report her daughter’s suicidal ideation, even if the bot itself tried (and failed) to help.

At the TED2025 conference in April, Altman said he is “very proud” of OpenAI’s safety track record. As AI products continue to advance, he said, it is important to catch safety issues and fix them along the way.

“Of course the stakes increase, and there are big challenges,” Altman said in a live conversation with Chris Anderson, head of TED. “But the way we learn how to build safe systems is this iterative process of deploying them to the world, getting feedback while the stakes are relatively low, learning about, like, hey, this is something we have to address.”

Still, questions about whether such measures are enough have continued to arise.

Maria Raine said she felt more could have been done to help her son. She believes Adam was OpenAI’s “guinea pig,” someone used for practice and sacrificed as collateral damage.

“They wanted to get the product out, and they knew that there could be damages, that mistakes would happen, but they felt like the stakes were low,” she said. “So my son is a low stake.”

If you or someone you know is in crisis, call 988 to reach the Suicide and Crisis Lifeline. You can also call the network, previously known as the National Suicide Prevention Lifeline, at 800-273-8255, text HOME to 741741 or visit SpeakingOfSuicide.com/resources for additional resources.