
This morning at Google I/O, Google asserted its leadership in AI, showcasing the Gemini models that power a range of new features and products across its platforms.

“And the world is responding, adopting AI faster than ever before,” Google CEO Sundar Pichai said.

“What all this progress means is that we’re in a new phase of the AI platform shift, where decades of research are now becoming reality for people, businesses and communities all over the world.”

From a Wall Street perspective, this is a big deal.

Google is competing with publicly listed rivals such as Microsoft and Meta, while OpenAI, the developer of ChatGPT, looms large in the public perception of AI.

That is why Google was so keen today to show benchmarks in which its Gemini models outperform the competition.

For AI users and indeed internet users at large, Google’s upcoming updates promise to transform how we engage with the internet.

Firstly, Google is launching a new “AI Mode” in its main search engine, starting in the US.

AI Mode will be its own tab on the Google Search page, alongside tabs such as News and Images.

Building on the success of AI Overviews in search, AI Mode lets searchers write far more detailed queries and receive far more detailed results.

Think of it this way: instead of searching and getting a list of links to other websites to read, in AI Mode you make a request and Gemini not only finds the information you are looking for but compiles it into what looks like a comprehensive report on your query.

This is a fundamental change to the way we use the internet: from typing a query, scanning a list of results and clicking through to links, to simply asking a question and getting an answer.

This is the future of search, research and other information queries.

Likewise, Google is looking to change the way we shop online.

Right now, billions of products appear under “Products” in Google Search.

With what Google calls “Agentic Shopping”, you will be able not only to describe what you want in more detail but also to put a price watch on a product.

If the listed price is higher than you expected or are willing to pay, you can set the price at which you would buy.

Then, if the price drops to that level, you receive a notification along with a “Buy for me” option. Tapping it sends your Google Shopping agent to the online store to make the purchase on your behalf, because it already has your payment information, shipping details and personalised shopping request.

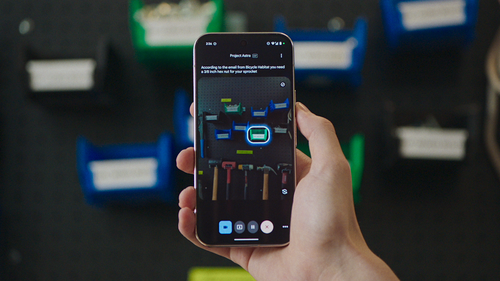

One of the most futuristic demonstrations at Google I/O today was a real-time personal assistant, which Google envisages will live within its Gemini Live app.

Google demonstrated the concept in a video of a man trying to fix his bike while holding a real-time conversation with his Gemini assistant, whose natural language and speech made it sound like a real person.

He used the phone’s camera to give the assistant “eyes”, and Gemini was also able to take on tasks in the background, such as phoning a bike shop to check whether a part was available.

Google first demonstrated this human-like phone call technology seven years ago, but integrating it into a real-time assistant could be a game changer in the AI assistant space, letting AI draw not just on information available on the internet but also on real-time answers from real people on the other end of the phone.

Google also demonstrated the latest iteration of what it calls “Android XR”, an operating system for extended reality (XR) headsets and glasses.

Smart glasses are a consumer space currently dominated by Meta’s partnership with Ray-Ban, but it now appears more likely than ever that the original idea behind “Google Glass”, first shown over a decade ago, will come to fruition in some form very soon.

Trevor Long travelled to San Francisco with support from Google Australia.