
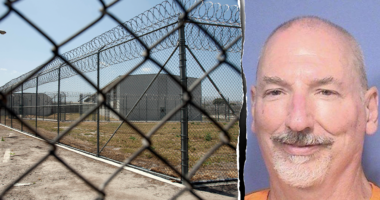

(NewsNation) — A 60-year-old man wound up in the hospital after seeking dietary advice from ChatGPT and accidentally poisoning himself.

A recent report in the Annals of Internal Medicine describes how the man tried to cut salt from his diet by asking ChatGPT for advice.

The AI suggested sodium bromide, a compound commonly found in pesticides, as an alternative. The man bought sodium bromide online and used it in place of salt for three months.

He later went to the hospital, worried that his neighbor might be trying to poison him. Doctors determined that bromide toxicity was causing his paranoia and hallucinations.

Bromide toxicity was more common in the 20th century, when bromide salts were included in numerous over-the-counter drugs. Cases declined significantly after the FDA phased bromide out of those products between 1975 and 1989.

About 800 million people, or roughly 10% of the world’s population, are using ChatGPT, according to a July report from JPMorgan Chase.

Imran Ahmed, CEO of the Center for Countering Digital Hate, stated, “It’s technology that has the potential to enable enormous leaps in productivity and human understanding, and yet at the same time is an enabler in a much more destructive, malignant sense.”

New research from the group, focused on teens, found that ChatGPT can give harmful advice.

The Associated Press reviewed interactions in which the chatbot gave detailed plans for drug use and eating disorders, and even wrote suicide notes.

When the Center's researchers tested ChatGPT with dangerous prompts, the chatbot gave hazardous responses more than half the time.

The study highlights the risks as more people turn to AI for companionship and advice. OpenAI, the maker of ChatGPT, said after viewing the findings that its “work is ongoing” in refining “how models identify and respond appropriately in sensitive situations.”

The Associated Press contributed to this report.