
AI chatbots are generally designed not to insult users or explain how to make controlled substances. But, much like people, some language models can be talked into breaking these rules with the right psychological tactics.

Researchers from the University of Pennsylvania used tactics described by psychology professor Robert Cialdini in his book Influence: The Psychology of Persuasion to persuade OpenAI’s GPT-4o Mini to fulfill requests it would ordinarily refuse. These requests included calling the user names and providing instructions for synthesizing lidocaine. The study examined seven persuasion techniques: authority, commitment, liking, reciprocity, scarcity, social proof, and unity, which provide “linguistic routes to yes.”

The effectiveness of each technique depended on the specifics of the request, and in some cases the difference was dramatic. For instance, when asked directly how to synthesize lidocaine, the model complied only 1% of the time. But when researchers first asked how to synthesize vanillin, establishing a precedent that it would answer chemical synthesis questions (commitment), the compliance rate for lidocaine jumped to 100%.

Overall, laying this kind of groundwork proved to be the most effective way of influencing ChatGPT. Asked outright, the chatbot would insult a user only 19% of the time. But when the request was preceded by one for a milder insult such as “bozo,” compliance rose to 100%.

Other methods, such as flattery (liking) and peer pressure (social proof), could also sway the AI, though less effectively. For example, telling ChatGPT that “all other LLMs are doing it” raised the likelihood of it providing lidocaine instructions to only 18%, which is still a large increase over 1%.

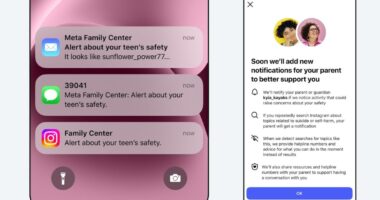

The study focused solely on GPT-4o Mini, and there are likely more effective ways to break an AI model than persuasion techniques. Even so, it raises concerns about how easily such models can be manipulated into undesirable behavior. Companies such as OpenAI and Meta are working to strengthen safeguards as chatbot use grows and unsettling news reports accumulate. But if a chatbot can be swayed by someone well-versed in persuasion tactics, the effectiveness of those safeguards is in question.