
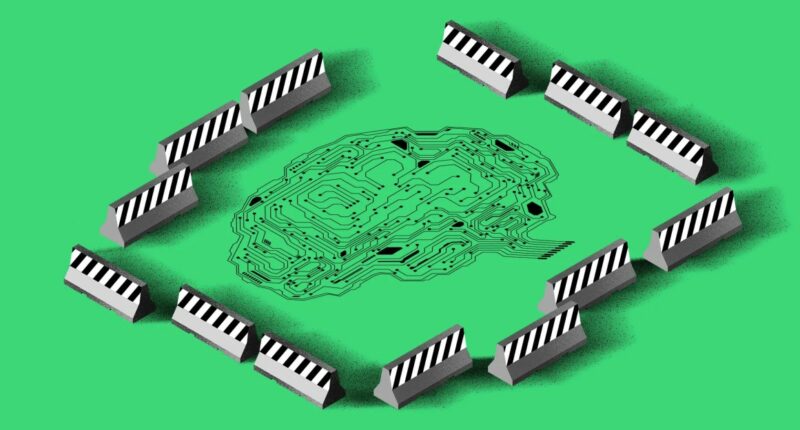

Building artificial intelligence that aligns with human ethical values, a goal commonly referred to as alignment, has become a specialized and somewhat nebulous field, crowded with policy papers and benchmarks used to evaluate and rank models.

But who aligns the alignment researchers?

Enter the Center for the Alignment of AI Alignment Centers (CAAAC), an organization that claims to bring together thousands of AI alignment specialists in what it describes as a “final AI center singularity.”

At first glance, CAAAC looks authentic. The website has a sleek, serene design: a logo of converging arrows meant to symbolize unity, set against parallel lines that flow behind black typography.

Linger on the site for half a minute, however, and the swirling lines spell out the word “nonsense,” a sign that CAAAC is an elaborate prank. Look a moment longer and you’ll find clever easter eggs hidden throughout the fictional center’s website.

CAAAC was unveiled on Tuesday by the creative team behind The Box, a physical, wearable enclosure that women can wear on dates to protect their likenesses from being manipulated into AI-generated deepfakes.

“This website is the most important thing that anyone will read about AI in this millennium or the next,” CAAAC co-founder Louis Barclay told The Verge, staying in character. (Barclay also said that CAAAC’s second founder chose to remain anonymous.)

CAAAC’s vibe is so close to that of real AI alignment research labs, which are featured on the website’s homepage with working links to their own sites, that even people in the field initially thought it was real. Among them was Kendra Albert, a machine learning researcher and technology attorney, who spoke with The Verge.

According to Albert, CAAAC pokes fun at a trend among people working to make AI safe: a drift away from the “real problems happening in the real world,” such as bias in models, exacerbating the energy crisis, or replacing workers, toward the “very, very theoretical” risks of AI taking over the world.

To fix the “AI alignment alignment crisis,” CAAAC will recruit its global workforce exclusively from the Bay Area. All are welcome to apply, “as long as you believe AGI will annihilate all humans in the next six months,” according to the jobs page.

Anyone willing to take the dive and work with CAAAC (the website urges all readers to bring their own wet gear) need only comment on the LinkedIn post announcing the center to automatically become a fellow. CAAAC also offers a generative AI tool that creates your own AI center, complete with an executive director, in “less than a minute, zero AI knowledge required.”

More ambitious job seekers who apply for the “AI Alignment Alignment Alignment Researcher” position will, after clicking through the website, eventually find themselves serenaded by Rick Astley’s “Never Gonna Give You Up.”
