
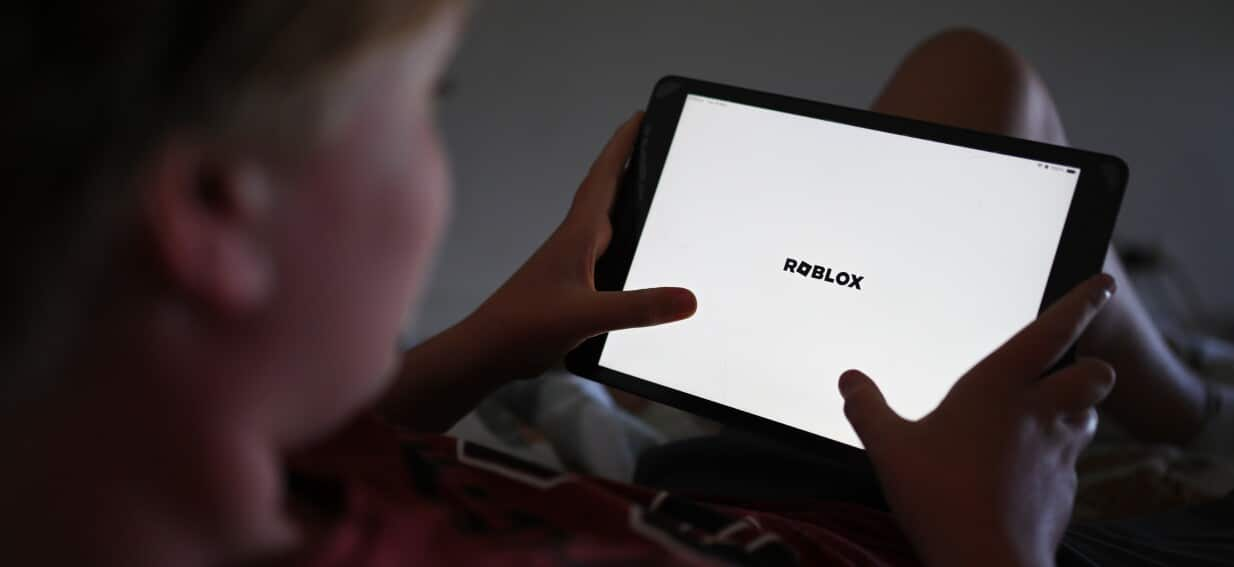

Roblox, a controversial gaming platform, is under scrutiny over ongoing allegations that it is being used to expose children to sexually explicit and suicidal content.

In response, Communications Minister Anika Wells has requested an urgent meeting with the platform, just two months after Australia's pioneering social media restrictions took effect.

Wells expressed deep concern over reports that young Roblox users are encountering shocking and inappropriate content created by other users.

“What is even more alarming are the accounts of predators targeting children, seeking to manipulate their natural curiosity and innocence,” Wells stated.

Australia's regulations, which took effect on December 10, require digital platforms to verify users' ages and bar anyone under 16.

Ten digital platforms must comply with the laws, including Google's YouTube; Meta's Facebook, Instagram and Threads; Snapchat; Reddit; and TikTok.

An issue of ‘deep concern’

Roblox, which is not named under the law, said 60 per cent of its Australian daily active users had completed age checks.

Roblox is not a single game but a vast ecosystem of user-created "experiences" hosted on the platform.

In the lead-up to the ban, parents raised concerns about harms on Roblox, including sexually explicit and suicidal content being shared in public chats.

Wells said such content persisted despite Roblox having engaged "extensively" with eSafety over the past two years.

“This is untenable and these issues are of deep concern to many Australian parents and carers,” she said.

eSafety Commissioner Julie Inman Grant said Roblox must act immediately to block predators from accessing children following the "horrendous" reports.

Roblox told eSafety it had delivered on its commitments under the ban, including switching off features such as direct chat and voice functions for Australian children.

Inman Grant said the platform would be assessed for compliance.

“We remain highly concerned by ongoing reports regarding the exploitation of children on the Roblox service, and exposure to harmful material,” she said.

“They can and must do more to protect kids, and when we meet I’ll be asking how they propose to do that.”

A digital duty of care

Platforms that fail to comply with the social media ban face fines of up to $49.5 million.

Wells has asked the online safety watchdog which of its powers could be strengthened to combat harms on Roblox, as the government works towards legislating a digital duty of care.

The proposed legal obligation is separate from the social media ban and would require large online platforms to take proactive, reasonable steps to prevent foreseeable harms to users.

The commissioner said codes targeting age-restricted material, including pornography and self-harm content, would come into force on March 9 and would apply to Roblox.

Readers seeking support can ring Lifeline crisis support on 13 11 14 or text 0477 13 11 14, Suicide Call Back Service on 1300 659 467 and Kids Helpline on 1800 55 1800 (for young people aged 5 to 25). More information is available at beyondblue.org.au and lifeline.org.au.

Anyone seeking information or support relating to sexual abuse can contact Bravehearts on 1800 272 831 or Blue Knot on 1300 657 380.

For the latest from SBS News, download our app and subscribe to our newsletter.