
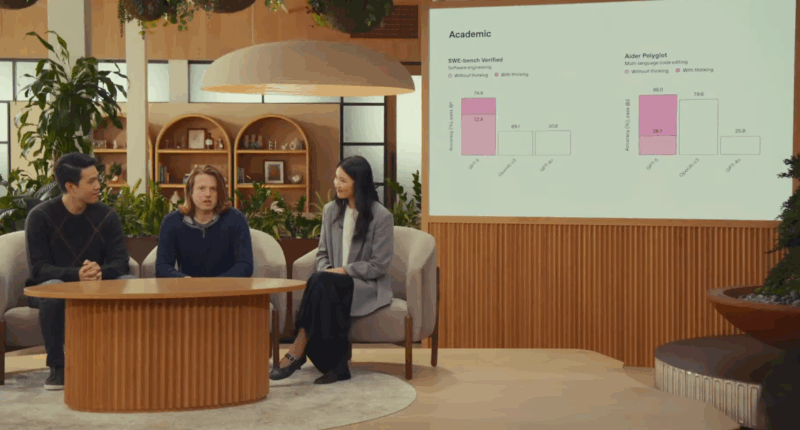

During its major GPT-5 presentation on Thursday, OpenAI unveiled several charts intended to highlight the model’s capabilities. However, upon closer inspection, some of these graphs contained inaccuracies.

One chart, meant to show GPT-5’s performance in “deception evals across models,” was drawn to an inconsistent scale. For “coding deception,” for instance, it listed GPT-5 with a 50.0 percent deception rate, yet the bar for OpenAI’s o3, which scored a lower 47.4 percent, was drawn larger.

Another chart gave GPT-5 a lower score than o3 yet drew it with a bigger bar. It also showed o3 and GPT-4o with different scores but identically sized bars. The chart was problematic enough that CEO Sam Altman commented on it, calling it a “mega chart screwup,” and an OpenAI marketing team member apologized for what they termed an “unintentional chart crime.”

OpenAI did not immediately reply to a request for comment. While it remains unclear whether GPT-5 was used to generate the charts, the errors cast a shadow over the company’s major launch event, particularly as it was promoting the “significant advances in reducing hallucinations” achieved with the new model.