
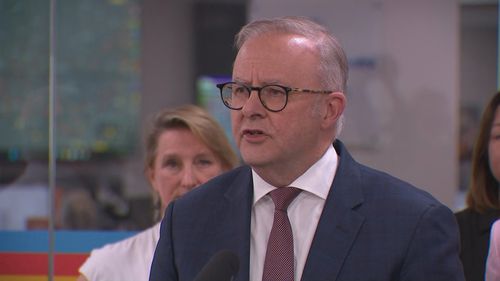

In a press briefing from Victoria’s State Control Centre, Prime Minister Anthony Albanese unveiled a substantial funding boost for fire recovery efforts. The joint contribution from federal and state governments raises the total allocation for the Commonwealth-State Disaster Recovery Funding Arrangements to $329 million.

“This funding encompasses clean-up initiatives, emergency recovery support, and assistance for businesses and local governments across various sectors,” Albanese stated.

Four fires continue to burn across the state, though firefighters have contained all but two of them.

Four ‘watch and act’ warnings remain in effect for the Walwa and Carlisle River fires, meaning it is still too hazardous for residents of several communities within these zones to return home.

Communities in Gellibrand, Lovat, and parts of Carlisle River remain under evacuation orders due to the ongoing threat from the Carlisle River blaze.

Similarly, residents in areas such as Berringama, parts of Bullioh, Koetong, Lucyvale, Shelley, Bucheen Creek, Cravensville, and sections of the southern Nariel Valley and surrounding regions are unable to return to their homes due to the dangers posed by the Walwa bushfire.

In recent days, warnings have been downgraded in areas surrounding the active fire zones.

The announcement follows a joint $171 million disaster recovery payment support package that the federal and state governments unveiled earlier for fire-impacted communities.

Those funds cover support for primary producers, residents who have experienced prolonged power outages and uninsured homeowners who have lost their properties.
